America's AI Race

While there are clear advantages to being first, this is not a winner-take-all scenario like the space race.

This past summer, the White House unveiled Winning the Race: America’s AI Action Plan, a strategic blueprint accompanied by executive orders aimed at securing U.S. “global dominance” in artificial intelligence. The plan aspires to “set global AI standards and reap broad economic and military benefits.” While visionary in scope, it is also grounded in pragmatic steps, including the rapid expansion of AI infrastructure, such as data centers and power capacity, and equipping Americans with the skills needed to thrive in an AI-driven economy. It also seeks to boost U.S. technology exports to help cement American dominance on the world stage. A robust and secure AI ecosystem clearly serves the national interest, and many of these proposals support that aim. Still, the plan comes with trade-offs, notably the environmental cost of large-scale data center development.

The plan’s guidance on AI models focuses primarily on foundation models procured by the federal government. Although the focus is on government procurement, such requirements often shape the broader market, given the government's significant purchasing power. Notably, the guidance targets the foundation models themselves, such as those behind ChatGPT, Gemini, and Claude, rather than the applications built on top of them. Interestingly, it does not explicitly mandate the use of American-made models. In fact, a provision directing NIST to assess Chinese models for alignment with communist values suggests that, at least in principle, foreign models could be considered acceptable for federal use.

The document calls on foundation model developers to “acknowledge uncertainty where reliable information is incomplete or contradictory.” This is an admirable goal, and one that users and AI experts alike would welcome, but it is not currently feasible. Modern generative AI models lack a reliable method for estimating uncertainty. Unlike traditional machine learning models such as logistic regression, which can output interpretable confidence scores, foundation models are essentially probabilistic text generators. Their responses are shaped by the frequency and context of word associations, not by facts. Thus, they do not “know” the truth; rather, they predict the most likely next sequence of words based on patterns in their training data. Additionally, because the training data is internet-scale, it often includes unreliable, outdated, and contradictory information, all of which the model absorbs indiscriminately. Even if a generative model could produce a confidence score, it would reflect the statistical structure of its training corpus, not the likelihood that a given response is accurate.
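The point that a model’s “confidence” would track its training corpus rather than accuracy can be illustrated with a deliberately simplified Python sketch. It assumes a toy word-count model; real foundation models use neural networks over tokens rather than raw counts, and the corpus and place names here are invented for illustration.

```python
from collections import Counter

# Hypothetical toy corpus, invented for illustration: an outdated claim
# ("oldtown") appears three times; the current, correct one ("newtown") once.
corpus = [
    "the capital of examplestan is oldtown",
    "the capital of examplestan is oldtown",
    "the capital of examplestan is oldtown",
    "the capital of examplestan is newtown",
]

def next_word_distribution(corpus, prefix):
    """Estimate P(next word | prefix) purely from how often each word follows the prefix."""
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        # Scan each sentence for the prefix and count the word that follows it.
        for i in range(len(words) - n):
            if words[i:i + n] == prefix_words:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}  # prefix never seen: no estimate at all
    return {word: c / total for word, c in counts.items()}

dist = next_word_distribution(corpus, "examplestan is")
best = max(dist, key=dist.get)
print(best, dist[best])  # oldtown 0.75
```

The toy model is 75 percent “confident” in the outdated answer simply because it appeared more often; nothing in the computation checks the claim against the world.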

Likewise, foundation models lack explicit memory and source attribution, both during training and when generating responses. They cannot identify where a piece of information came from, unlike a human who might say, “I read it in Civitas Outlook, so it’s probably reliable.” Instead, a model’s knowledge is distributed across billions of parameters, with no clear source for any specific fact. This is a core aspect of the transparency problem in large language models (LLMs), and addressing it is the focus of ongoing explainability and interpretability research. However, systems this complex may never be fully understood, so the problem is likely to persist. Compounding the issue, the human feedback used to fine-tune these models often favors responses that are confident, concise, and direct. As a result, more cautious answers that explicitly acknowledge ambiguity tend to be rated lower, subtly training the model to hedge less and to present its output with greater, often unwarranted, certainty.

Similar transparency problems complicate the plan’s statement that “our AI systems must be free from ideological bias and be designed to pursue objective truth rather than social engineering agendas when users seek factual information or analysis.” Even if developers avoid deliberately fine-tuning models to introduce ideological bias, models inherently reflect, and often amplify, the biases present in their training data. These datasets are far too large to audit or meaningfully scrub of bias, so the models will still reflect the prevailing ideologies embedded in the data. Most of this data likely comes from “WEIRD” sources: Western, educated, industrialized, rich, and democratic societies. Engineers may deliberately remediate biases, for example, by oversampling data about women engineers to counter the assumption that engineers are male, or by adding positive examples from underrepresented racial groups to counter racial biases. More controversially, developers may add safety filters that block answers deemed dangerous or classified as hate speech.
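Oversampling, one of the remediation techniques mentioned above, can be sketched in a few lines of Python. This is a minimal illustration of simple random oversampling; the function name `oversample` and the labeled toy data are hypothetical, and production pipelines work on far larger corpora and may use more careful reweighting.

```python
import random
from collections import Counter

def oversample(examples, group_of, seed=0):
    """Simple random oversampling: duplicate randomly chosen examples from
    smaller groups until every group matches the size of the largest one."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    by_group = {}
    for ex in examples:
        by_group.setdefault(group_of(ex), []).append(ex)
    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        # Sample with replacement from this group to close the gap to the target size.
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced

# Hypothetical toy data: (occupation, gender) pairs skewed 8-to-2.
data = [("engineer", "male")] * 8 + [("engineer", "female")] * 2
balanced = oversample(data, group_of=lambda ex: ex[1])
counts = Counter(gender for _, gender in balanced)
print(counts["male"], counts["female"])  # 8 8
```

Oversampling only repeats examples that already exist; it changes how often the model sees them, not what they say, which is one reason it mitigates rather than eliminates bias.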

The strategy document asserts that “AI is far too important to smother in bureaucracy at this early stage, whether at the state or Federal level.” Currently, the U.S. lacks comprehensive federal AI regulation, though there are several executive orders and agency-specific guidelines. A few targeted bills have also been introduced in Congress, addressing specific concerns such as the use of AI in political advertising and the protection of individuals' voices and visual likenesses from unauthorized replication. The document suggests that federal AI funding should not be directed to states with “burdensome AI regulations that waste these funds,” while still respecting states' rights to enact “prudent laws that are not unduly restrictive to innovation.” The terms “burdensome” and “unduly restrictive” are vague and open to interpretation, potentially allowing the federal government to steer funding based on subjective assessments of state-level AI policy.

While not proposing formal regulations, the strategy document calls for revising the NIST AI Risk Management Framework to “eliminate references to misinformation, Diversity, Equity, and Inclusion, and climate change.” Climate change is not actually mentioned in the framework, although it does address the energy demands of AI systems and emphasizes the importance of environmental sustainability. References to diversity in the framework are framed broadly, encompassing “demographics, disciplines, experience, expertise, and background.” The objection is likely aimed primarily at demographic diversity, though the other dimensions, such as disciplinary and experiential diversity, are clearly essential for building robust and effective AI systems. Separately, the strategy expresses support for training and employing scientists and experts, though the document suggests that this expanded AI training may occur outside traditional university channels.

The strategy places strong emphasis on expanding the resources AI requires, including large-scale infrastructure projects such as data centers and the power grid upgrades needed to support them. It declares, “Build, Baby, Build!” and calls for reducing bureaucratic hurdles, particularly environmental regulations. The plan also advocates investment in advanced chip manufacturing and proposes opening federal land for data center construction. According to the nonpartisan National Conference of State Legislatures, upgrading the power grid is a longstanding need that is not driven solely by AI demand. Still, the scale of the undertaking is enormous, with estimated costs ranging from billions to trillions of dollars, and the document does not specify who will bear that financial burden.

Building data centers and the infrastructure needed to power them has significant environmental impacts, including greenhouse gas emissions. These facilities require substantial land and enormous volumes of water for cooling, drawn directly from local ecosystems. Additionally, the disposal of electronic waste, including old servers that may contain hazardous materials like lead and mercury, often occurs within nearby communities. Noise from servers, cooling systems, and backup generators can further affect local quality of life. Supporting infrastructure, such as roads and telecommunications networks, must also be upgraded to accommodate these centers.

On the other hand, data centers contribute to local economies by creating jobs during both construction and ongoing operations and by generating tax revenue. They can also attract other tech companies, including those not involved in AI, that prefer to be located near these facilities, bringing additional employment and economic activity. Given these trade-offs, federal planning efforts should give communities meaningful input into what gets built in their regions, allowing them to weigh the environmental burdens against the economic benefits. While expanding infrastructure such as data centers is essential, a more immediate step may be to revisit and streamline existing regulations that hinder AI deployment, for example, in healthcare. Removing unnecessarily restrictive policies could accelerate adoption more effectively than infrastructure investment alone.

The strategy document also outlines a goal to export the full American AI tech stack, including hardware, models, software, applications, and standards. While much of this technology has already been accessible internationally, the most advanced AI chips were previously restricted under export controls imposed by both the Biden and Trump administrations to prevent other nations, particularly China, from competing with the United States commercially and militarily. Domestically, the government also aims to expand the applied use of AI in areas like autonomous vehicles and unmanned aerial systems.

The drive to “win” in AI reflects a broader ambition to enhance American soft power and secure the economic advantages of having AI technologies developed by U.S. companies. Though often framed as the U.S. versus the world, the real competition is primarily between the U.S. and China, with the European Union largely sidelining itself through a highly restrictive regulatory approach. Beyond the obvious benefits, such as job creation and attracting top global talent, there is significant value in achieving first-mover advantage and capturing market share. This includes the ability to lock in customers early, shape global standards to align with American technologies, and establish dominant market positions. Domestic development also strengthens national security and supply chain resilience by reducing U.S. reliance on foreign AI systems, especially those from adversarial nations that may restrict access. Building foundation models within the United States further supports industry partnerships, making it easier for sectors like healthcare to collaborate with AI providers while keeping regulatory alignment and data governance within U.S. jurisdiction.

However, framing AI development as a “race” is somewhat misleading. While there are clear advantages to being first, this is not a winner-take-all scenario like the space race. The language of a “race” may be useful for rallying public and political support, but it oversimplifies the situation. Unlike the Cold War-era rivalry between NASA and the Soviet space program, current AI advancements are driven mostly by private companies, not governments. As the rapid growth and diversity of U.S.-based AI firms demonstrate, developing powerful foundation models no longer requires the full resources of a nation-state. In this context, allocating hundreds of millions of dollars in federal funding to major companies like Anthropic, Google, OpenAI, or xAI risks distorting the market and prematurely stifling healthy competition in a field where innovation thrives on diversity and openness.

While the government asserts that it is a “national security imperative for the United States to achieve and maintain unquestioned and unchallenged global technological dominance,” that goal is not feasible. “Winning” is not a symbolic victory like being “first to the moon,” but rather a temporary lead in market share within a rapidly evolving global ecosystem. In reality, there will be multiple winners and frontrunners across different domains, much like the ongoing, multipronged race to cure cancer. The United States will undoubtedly wield outsized influence, but history shows that market leadership by one nation is rarely permanent. The U.S. was once the undisputed leader in steel, automobile, and telecommunications manufacturing, until regulation, global competition, and external innovation shifted the balance. The same will inevitably happen in AI.

Additionally, the AI ecosystem is far too complex and specialized for any single country to dominate entirely. Foundation models span a range of niches, such as language, code, and drug discovery, and this specialization is compounded by the globally interconnected nature of research and development. In the tech industry, national borders are porous, and progress will likely be distributed and collaborative.

Rachel Lomasky is Chief Data Scientist at Flux, a company that helps organizations do responsible AI.

Economic Dynamism

.jpg)

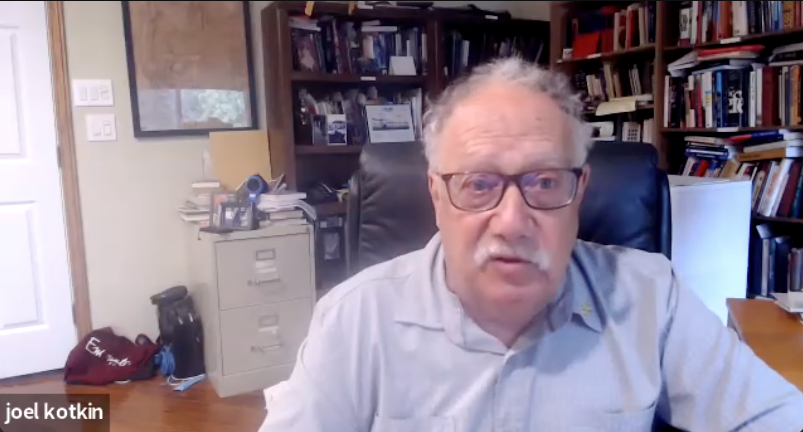

Do Dynamic Societies Leave Workers Behind Culturally?

Technological change is undoubtedly raising profound metaphysical questions, and thinking clearly about them may be more consequential than ever.

The War on Disruption

The only way we can challenge stagnation is by attacking the underlying narratives. What today’s societies need is a celebration of messiness.

Unlocking Public Value: A Proposal for AI Opportunity Zones

Governments often regulate AI’s risks without measuring its rewards—AI Opportunity Zones would flip the script by granting public institutions open access to advanced systems in exchange for transparent, real-world testing that proves their value on society’s toughest challenges.

Downtowns are dying, but we know how to save them

Even those who yearn to visit or live in a walkable, dense neighborhood are not going to flock to a place surrounded by a grim urban dystopia.

The Housing Crisis

Soaring housing costs are driving young people towards socialism—only dispersed development and expanded property ownership can preserve liberal democracy.

Oren Cass's Bad Timing

Cass’s critique misses the most telling point about today’s economy: U.S. companies are on top because they consistently outcompete their global rivals.

Blocking AI’s Information Explosion Hurts Everyone

Preventing AI from performing its crucial role of providing information to the public will hinder the lives of those who need it.

.jpeg)

.jpg)